As AI continues to transform industries, businesses must navigate a complex regulatory environment while balancing innovation with compliance. In this report, we highlight current legal frameworks and offer strategic insights for companies seeking to leverage AI responsibly. By understanding the regulatory landscape and implementing robust compliance measures, organizations can harness the potential of AI while safeguarding against legal and ethical pitfalls.

European AI Regulatory Frameworks

The European Union has taken the global lead in regulating AI through laws including the following:

- AI Regulation: AI Act

- Personal Data Privacy and Protection: EU General Data Protection Regulation (GDPR)

- Intellectual Property: Copyright Directive, etc.

- Data Sharing and Cloud Services: Data Act

- Cybersecurity: Network and Information Systems Directive

- Online Platforms: Digital Services Act

- Antitrust: Digital Markets Act

- And much more

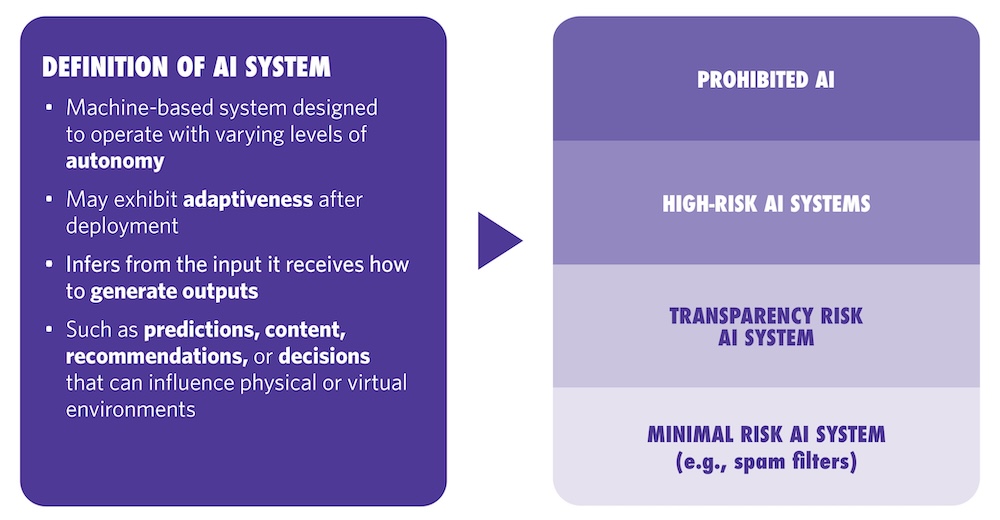

The EU AI Act has entered into force as the world’s first comprehensive AI-focused law. It is intended to apply to companies with a physical presence in the European Economic Area (EEA) and, notably, in certain circumstances to companies without a physical presence in the EEA. The act regulates two types of AI: foundation models (general-purpose AI models, or GPAI models) and AI systems (applications, including those built on GPAI models).

The AI Act imposes a tiered set of obligations based on the risk associated with different GPAI models and AI systems, ranging from minimal-risk to high-risk and prohibited categories.


On November 19, 2025, the European Commission published its “digital omnibus” legislative proposals, which seek to amend multiple major EU digital regulatory laws, notably the AI Act and the GDPR. If enacted into law, these proposals are intended to ease AI-related compliance obligations, for example by:

- Deferring the date on which the AI Act’s rules relating to “high-risk” AI systems come into effect

- Allowing providers of GPAI models additional time to update documentation and processes

- Narrowing the scope of what information is considered “personal data” under the GDPR

- Making it easier to train GPAI models on personal data that is subject to the GDPR

These proposals are welcome news for companies active in the EU. They will be considered in more detail in early 2026, but there is no guarantee that they will be enacted into law.

The United Kingdom, which for several years has taken a compliance-lite approach to AI regulation relative to the EU, has published its AI Opportunities Action Plan. The plan seeks to position the nation as a global leader in AI technology, leveraging both private and public sector solutions to enhance public services and drive economic growth. It emphasizes the creation of data centers and technology hubs, with a focus on AI safety and regulation that aligns with its pro-growth ambitions.

United States AI Regulatory Frameworks

The United States has taken a bold step with the release of “America’s AI Action Plan,” which outlines a strategic framework for securing the nation’s dominance in AI through innovation, infrastructure development, and international diplomacy. The plan marks a significant shift toward a deregulated environment, encouraging private sector–led innovation while emphasizing the importance of AI systems being free from ideological bias. The plan also highlights the need for rapid AI skill development and the establishment of regulatory sandboxes to accelerate AI deployment in critical sectors.

State-level initiatives in the United States, such as California’s AI transparency law and Texas’s Responsible AI Governance Act, reflect a growing recognition of the need for tailored AI regulations that address specific risks while fostering innovation. These efforts are complemented by the Federal Trade Commission’s Operation AI Comply, which aims to address deceptive AI practices and ensure consumer protection. The US Securities and Exchange Commission’s focus on cybersecurity and AI underscores the importance of regulatory vigilance in safeguarding against potential risks.

The White House’s December 2025 executive order marks a major shift toward a unified national policy framework for AI, with broad implications for technology companies, state governments, and regulated industries. It aims to establish a minimally burdensome national standard for AI policy and to limit state-level regulatory divergence.

Asian AI Regulatory Frameworks

In Asia, Singapore’s Infocomm Media Development Authority launched the Model AI Governance Framework for Generative AI to address concerns raised by generative AI (GenAI) while facilitating innovation in the field. India is actively shaping its AI regulatory landscape through initiatives and guidelines for responsible AI development and deployment, but it has not yet enacted AI-specific laws. India is also considering AI-related laws to serve as a companion to its Digital Personal Data Protection Act 2023.